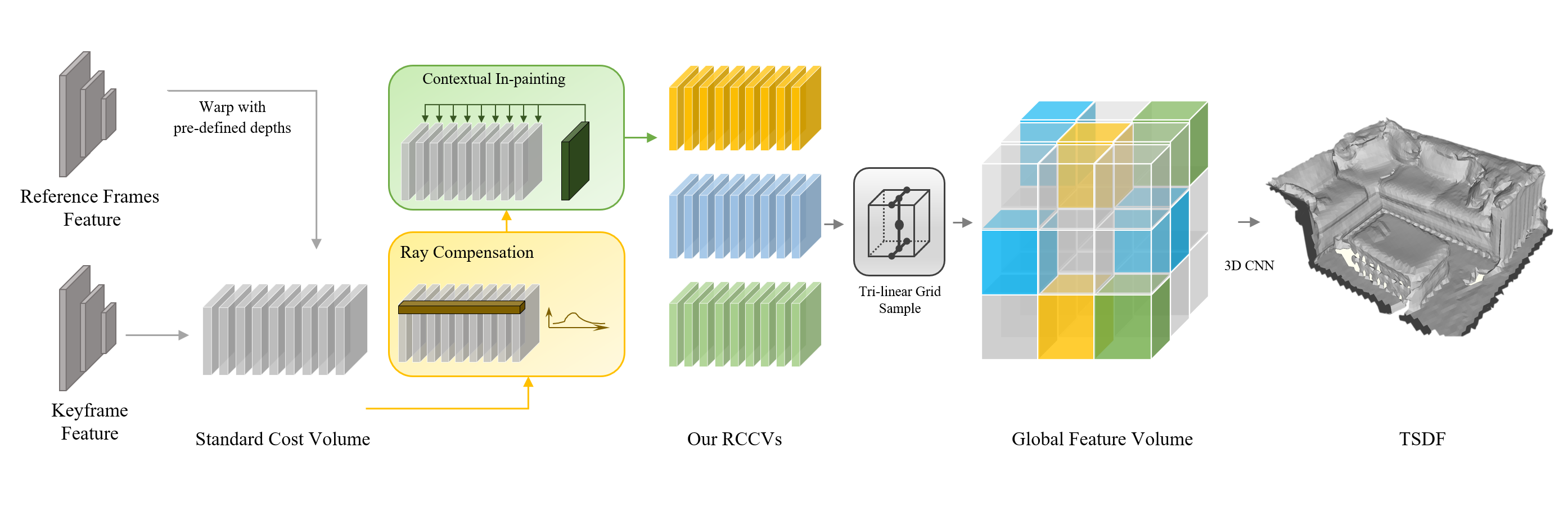

Pipeline overview

CVRecon Architecture: We first build standard cost volumes for each keyframe with reference frames. Novel

1Clemson University

2Microsoft

3Carnegie Mellon University

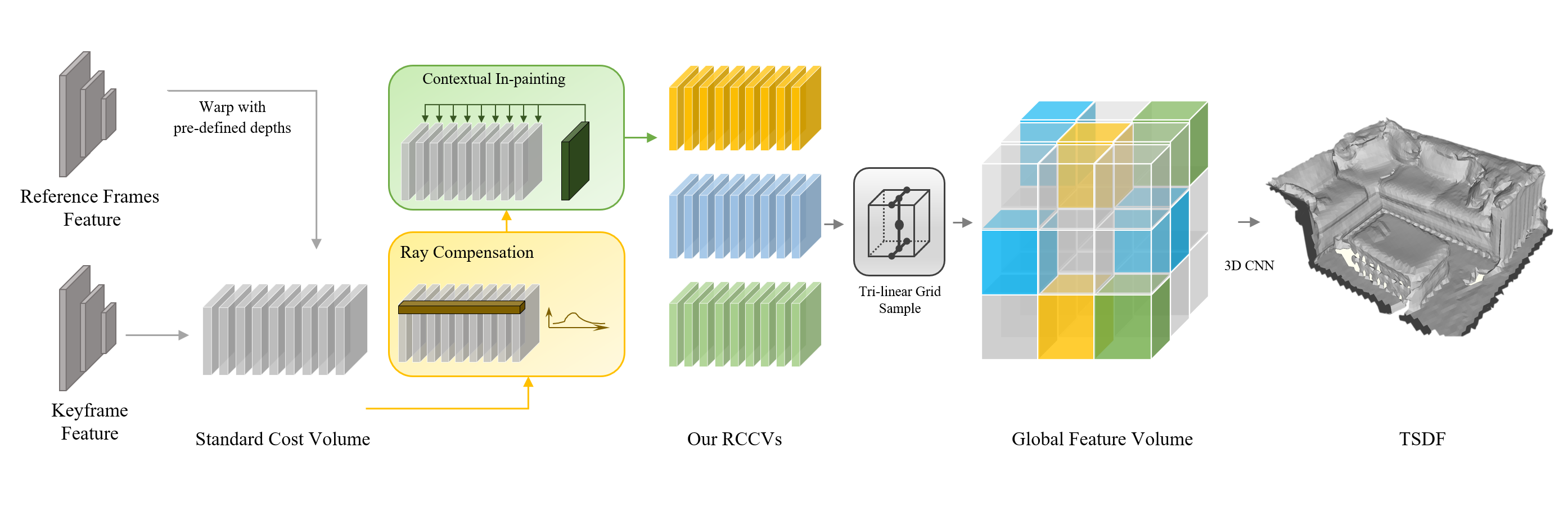

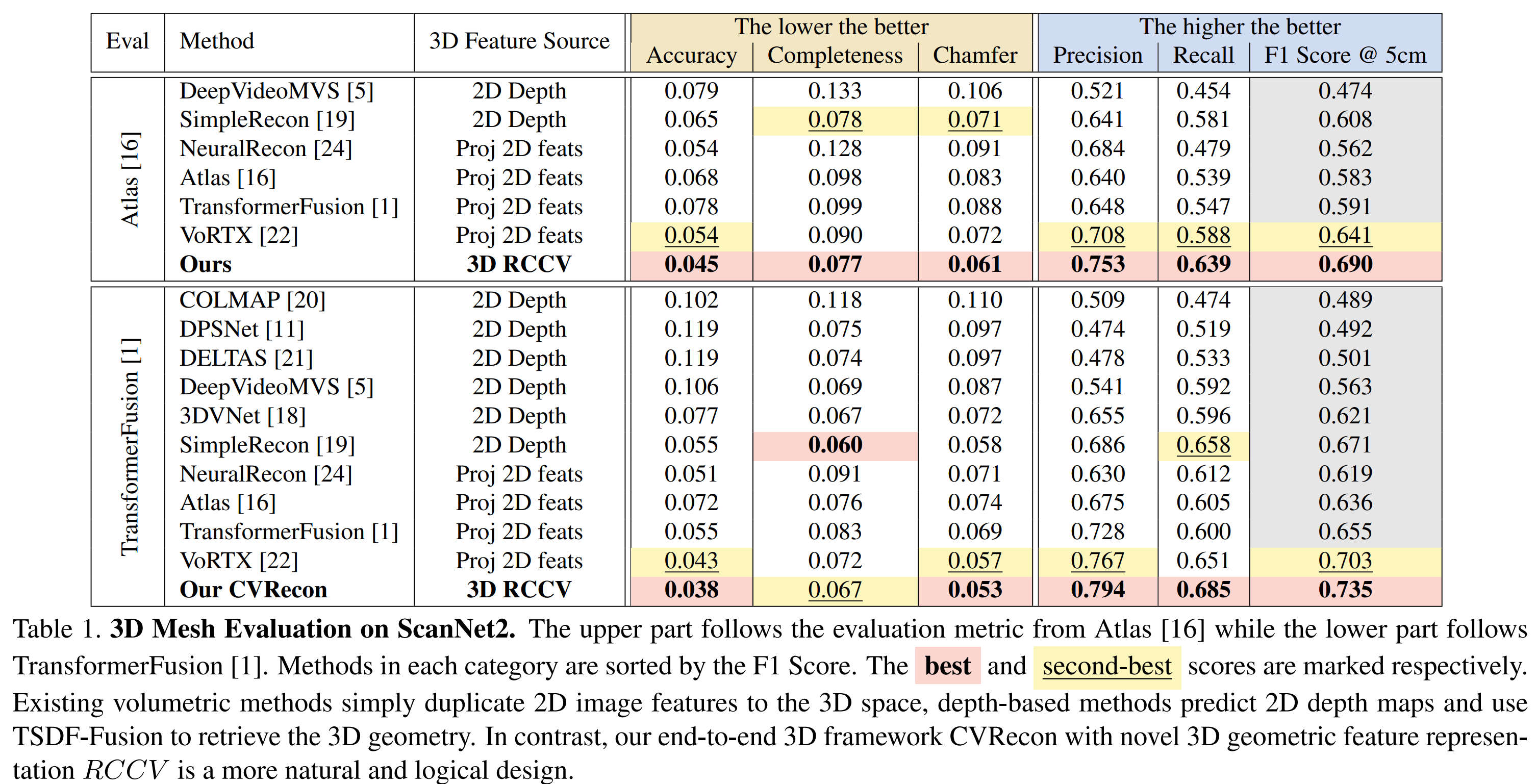

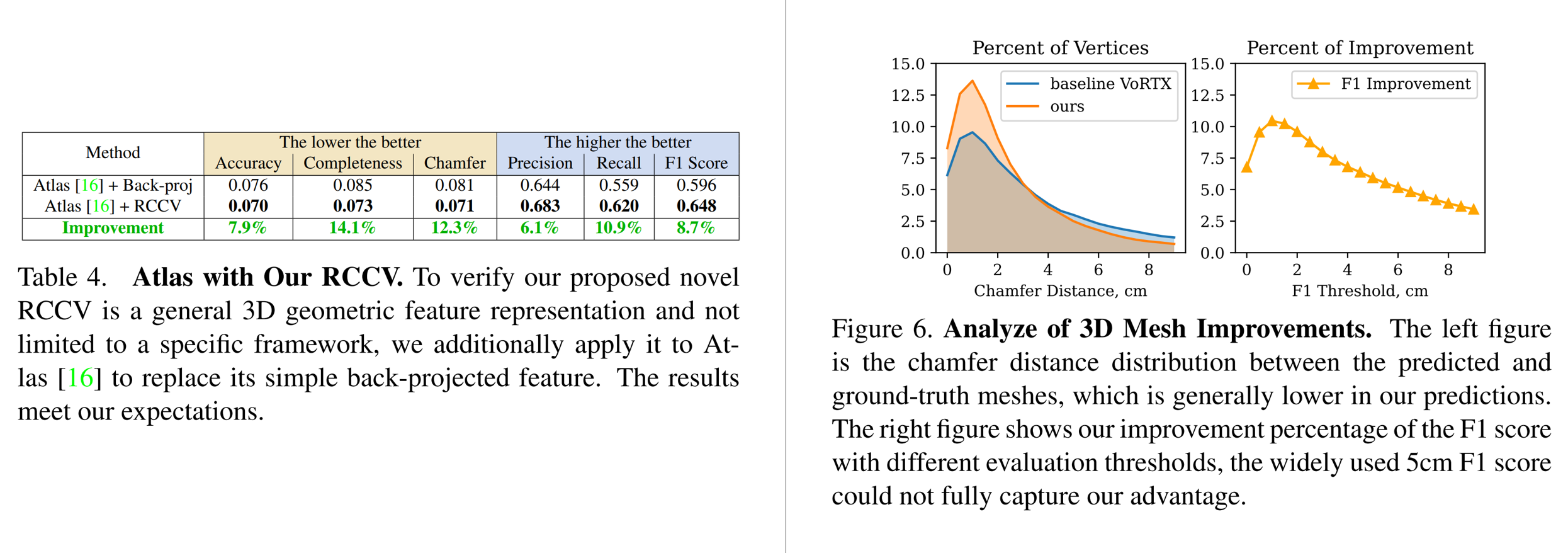

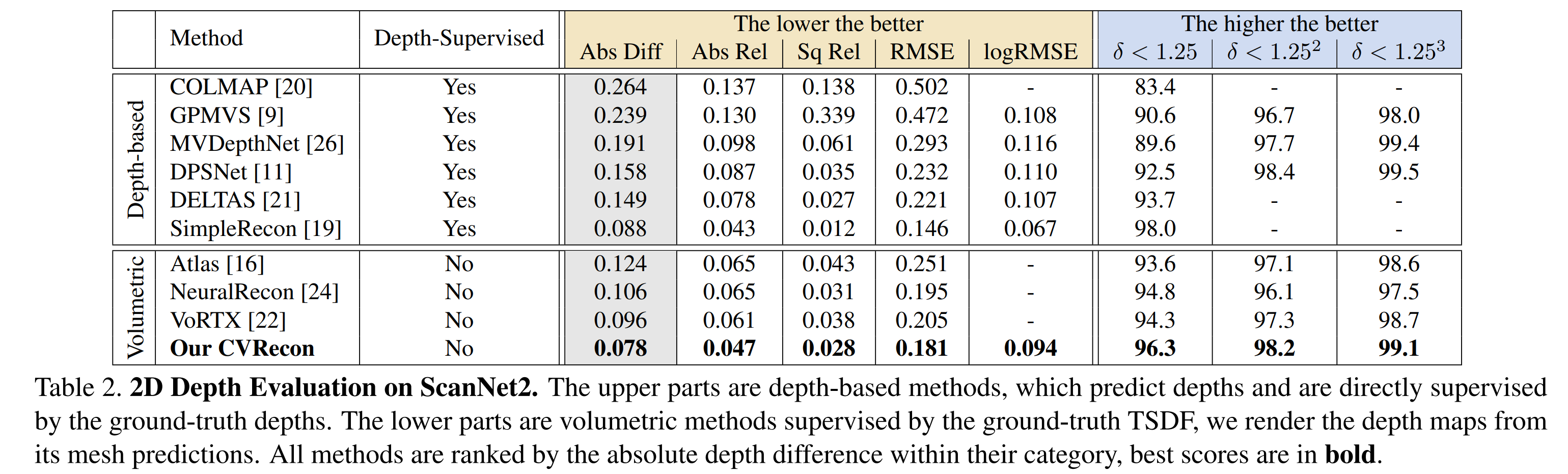

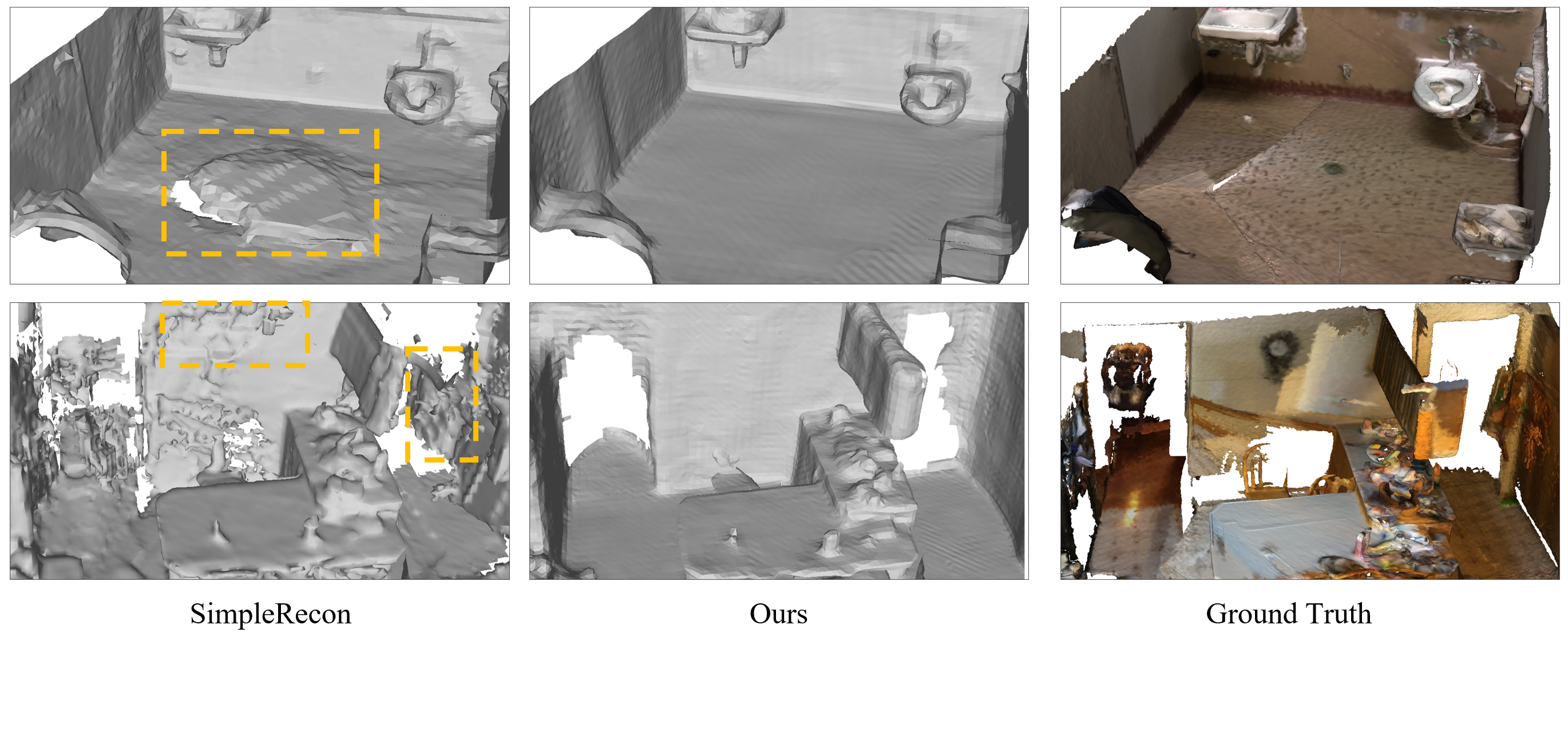

Recent advances in neural reconstruction using posed image sequences have made remarkable progress. However, due to the lack of depth information, existing volumetric-based techniques simply duplicate 2D image features of the object surface along the entire camera ray. We contend this duplication introduces noise in empty and occluded spaces, posing challenges for producing high-quality 3D geometry. Drawing inspiration from traditional multi-view stereo methods, we propose an end-to-end 3D neural reconstruction framework CVRecon, designed to exploit the rich geometric embedding in the cost volumes to facilitate 3D geometric feature learning. Furthermore, we present Ray-contextual Compensated Cost Volume (

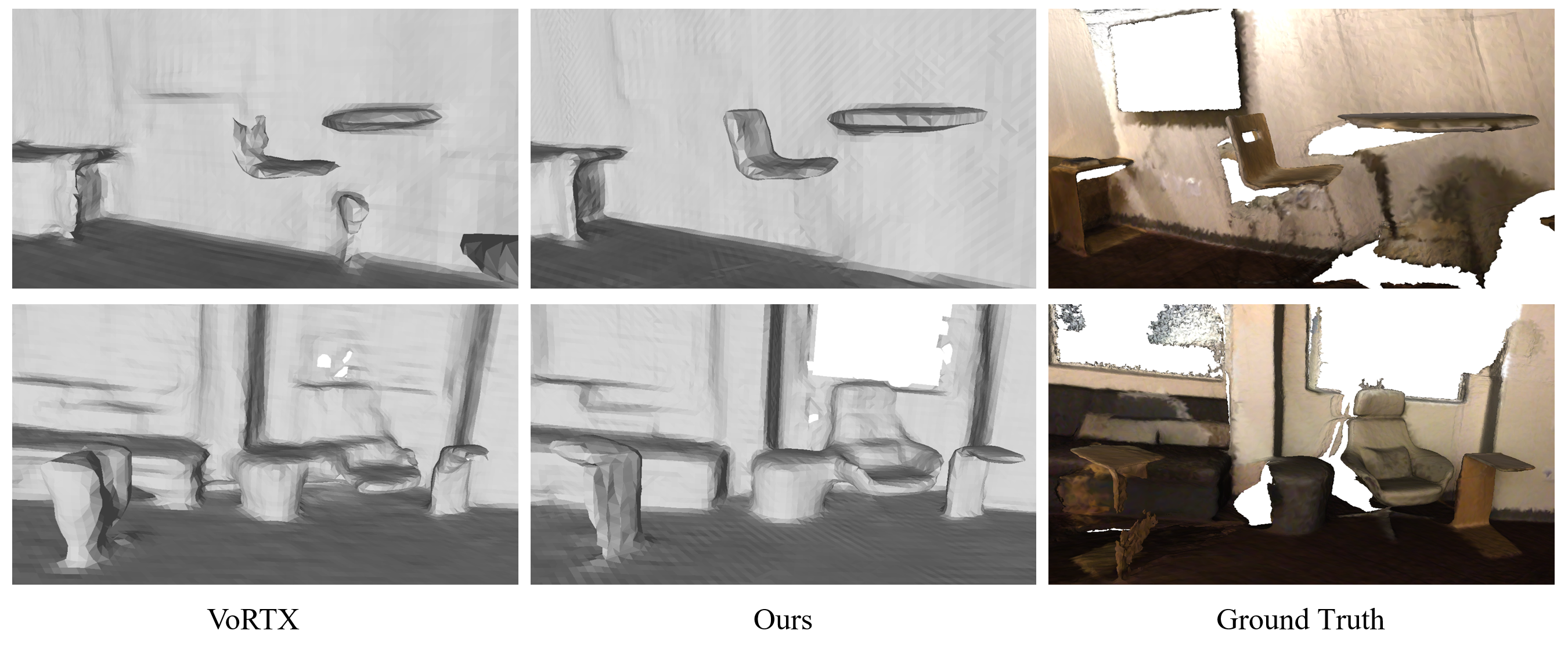

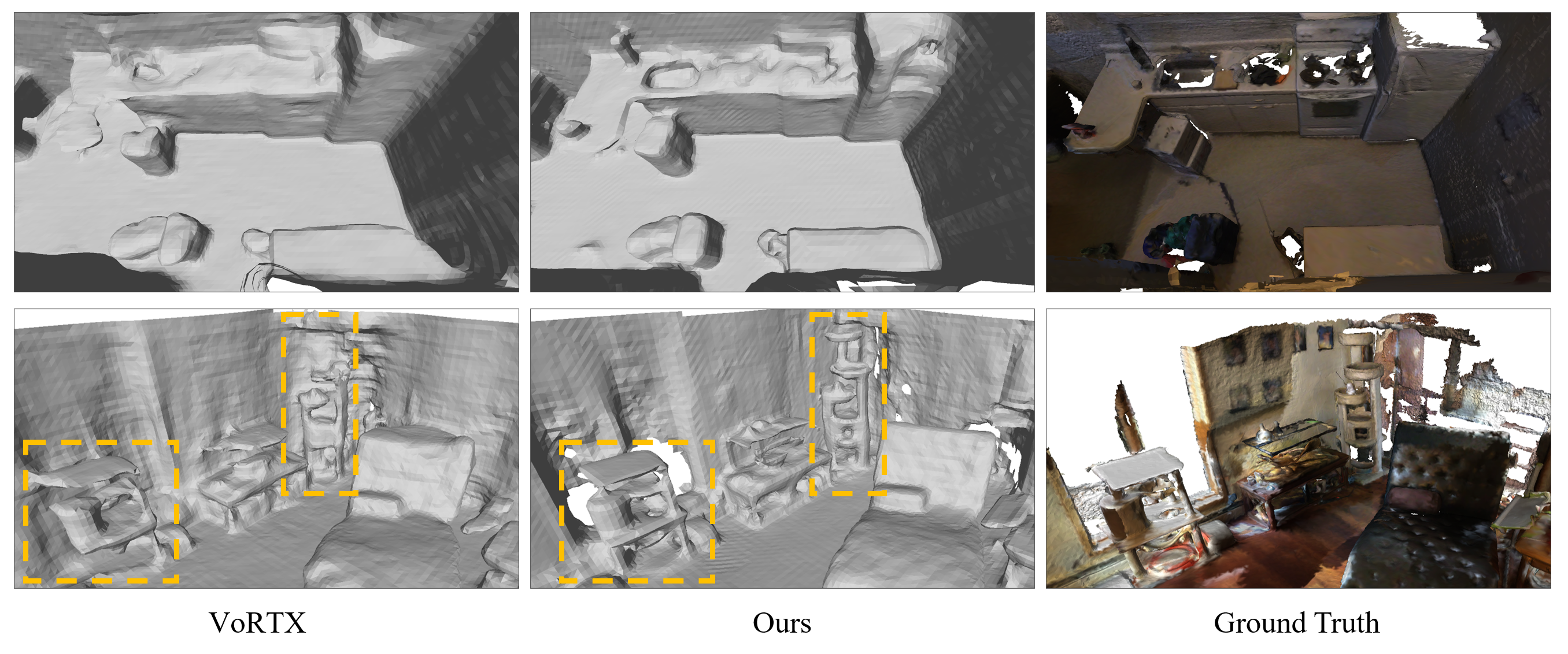

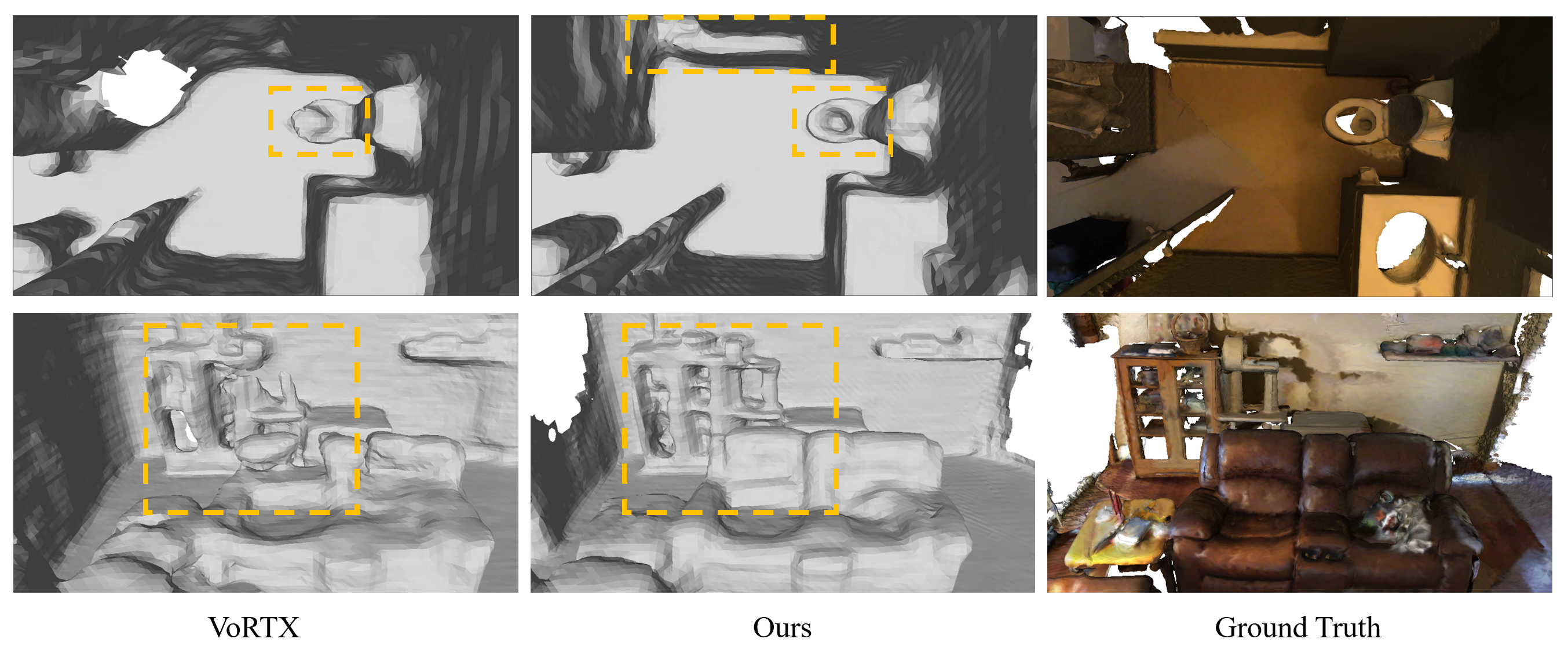

The only difference between our CVRecon and the VoRTX is we use our

CVRecon Architecture: We first build standard cost volumes for each keyframe with reference frames. Novel

@misc{feng2023cvrecon,

title={CVRecon: Rethinking 3D Geometric Feature Learning For Neural Reconstruction},

author={Ziyue Feng and Leon Yang and Pengsheng Guo and Bing Li},

year={2023},

eprint={2304.14633},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Welcome to checkout my work on Depth Prediction of Dynamic Objects (DynamicDepth [ECCV2022]) and Camera-LiDAR Fusion for Depth Prediction (FusionDepth [CoRL2021]).